Your tests should be based on questions that you need to know the answer to

Marketing testing plan

Testing is a word that we fundraisers and marketers use a lot, but I don’t see a lot of robust testing plans and I don’t see a lot of re-testing of the same thing.

We can test

- channels

- tactics

- strategies

- messaging

- calls to action and asks

- and we can test a platform or journey or conversion funnel.

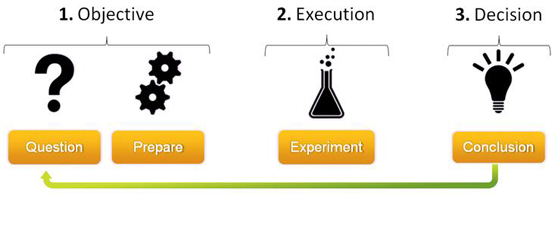

Testing to answer a question

But for me, testing is about having a question you want answered, and having an idea of what you would do if you knew the answer.

Test with a purpose, not just because.

And only test one thing at a time – otherwise you can’t know what element it was that delivered the uplift or downturn in results.

Fundraisers love to test, but I see a lot of charities that run ‘A Test’ and, if the test doesn’t work, are quick to abandon the strategy rather than revisit it and critically analyse whether the problem was the strategy, the story or the process.

If a new project, program or approach is tested and doesn’t work, often suppliers are blamed (we didn’t have the right telemarketing partner) or the timing was wrong (we were too slow getting our program out and missed the boat) or it was a brand awareness issue (there were bigger brands in market that we can’t compete with). Perhaps these things are all true, but there are likely other reasons why the people you did reach chose not to convert. Have you tested the value proposition, the story and its emotional impact, or the ease of your experience?

Testing is a long-term strategy that should be planned.

Of course you can test on the fly when you have an idea or concept you want to prove or disprove, but when you do, it should feed into the bigger plan of what you need to achieve.

Create a test plan

1. What questions do you have that you don’t know the answer to?

e.g. Does a colour image get a better click-through rate than a black and white image?

Does a stronger click-through rate (CTR%) correlate with a better conversion rate (CR%)?

2. What elements do you need to test to answer your questions?

How many tests do you need to run to answer your question?

Is it volume of responses? Or number of tests? Or % of people/database?
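One way to put a number on "how much volume do I need" is a standard sample size estimate for comparing two response rates. The sketch below is illustrative only: the function name `sample_size_per_variant` is hypothetical, and the defaults (a 5% significance level and 80% power) are common conventions I have assumed rather than anything specific to your program.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate number of people needed in EACH variant of a two-way split.

    baseline_rate: the response rate you get today (e.g. 0.02 for 2%).
    expected_rate: the rate you would need to see to act on the result.
    alpha, power:  assumed conventions (5% false-positive risk, 80% power).
    """
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ, otherwise there is nothing to detect")

    # Normal quantiles for a two-sided test and for the chosen power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    average_rate = (baseline_rate + expected_rate) / 2
    spread_if_no_difference = math.sqrt(2 * average_rate * (1 - average_rate))
    spread_if_difference = math.sqrt(
        baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    )

    numerator = (z_alpha * spread_if_no_difference + z_beta * spread_if_difference) ** 2
    return math.ceil(numerator / (baseline_rate - expected_rate) ** 2)


# Hypothetical example: current rate 2%, hoping to detect a lift to 3%.
needed = sample_size_per_variant(0.02, 0.03)
print(f"People needed per variant: {needed}")
```

The smaller the difference you want to detect, the more people you need, which is often the real reason a single small test cannot answer the question.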

3. How do you conduct these tests?

- A/B split test

  - At channel/source

  - At point of conversion

  - Content or functionality

- Segment test

  - Same content, different audience

  - Same audience, different content

- Dynamic content tests

- Timing of messages

- Frequency of communications
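For an A/B split, the practical detail that often goes wrong is the split itself: people need to be assigned without bias, and the same person should always land in the same group. Here is a minimal sketch of one way to do that by hashing an ID. The function name `assign_variant`, the test name and the supporter IDs are all hypothetical, and your email or CRM platform may already handle this for you.

```python
import hashlib


def assign_variant(contact_id, test_name, variants=("A", "B")):
    """Assign a contact to a variant, the same way every time.

    Hashing the test name together with the contact ID means a person
    keeps their variant for the life of one test, but may fall into a
    different group in the next test.
    """
    key = f"{test_name}:{contact_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return variants[int(digest, 16) % len(variants)]


# Hypothetical supporter IDs, for illustration only.
for supporter_id in ["S-1001", "S-1002", "S-1003"]:
    print(supporter_id, assign_variant(supporter_id, "colour-vs-bw-image"))
```

Because the assignment depends only on the ID and the test name, you can re-run it later to work out who saw which version when you come to report.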

4. Reporting and measuring your test

- What benchmark do we need to decide whether the result is positive or negative?

- What do the results tell us?

- How do results compare to expectations?

- How are we going to respond to what we’ve learned?

- Do we have enough information?
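To make "what do the results tell us?" and "do we have enough information?" concrete, one common approach is a two-proportion z-test, which estimates how likely a gap between two conversion rates is to be random noise. This is a sketch under assumptions: the function name `compare_conversion_rates` is hypothetical, the numbers in the example are made up, and a 0.05 cut-off for the p-value is a convention, not a rule.

```python
import math
from statistics import NormalDist


def compare_conversion_rates(conversions_a, sent_a, conversions_b, sent_b):
    """Two-proportion z-test comparing variant B against variant A."""
    rate_a = conversions_a / sent_a
    rate_b = conversions_b / sent_b

    # Pooled rate: the best single estimate if there were truly no difference.
    pooled = (conversions_a + conversions_b) / (sent_a + sent_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if standard_error == 0:
        raise ValueError("no variation in the data, so nothing can be compared")

    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value: chance of a gap at least this large from noise alone.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {"rate_a": rate_a, "rate_b": rate_b, "uplift": rate_b - rate_a,
            "z": z, "p_value": p_value}


# Made-up numbers, for illustration only.
result = compare_conversion_rates(conversions_a=20, sent_a=1000,
                                  conversions_b=22, sent_b=1000)
print(result)
```

A large p-value does not prove the two versions perform the same. It usually means the test was too small to tell, which is a prompt to re-test rather than abandon the idea.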

5. What is our new testing plan?

What did we learn from how we planned our last tests? Will we do it differently?

Test often, but have a plan

Testing should be encouraged to force us to think differently and challenge what we think we know.

Digital changes every year – what works now may not work in two years.

But we need to have a set of questions we need answers to, rather than randomly testing and hoping insights will drop into our laps. After all, when we’ve been doing something for a long time, we can often make a pretty accurate educated guess.

So our testing needs to tell us something our experience doesn’t.

Next time you hear yourself or someone else say “we should test that”, smile and ask them politely,

“What question will it answer for you? And if you knew the answer now, what would you do with that information?”